Instagram experiments with benign censorship in bid to tackle bullying

Social media platform Instagram has implemented a couple of new tools that aim to take a softer approach to protecting users from harassment.

July 9, 2019

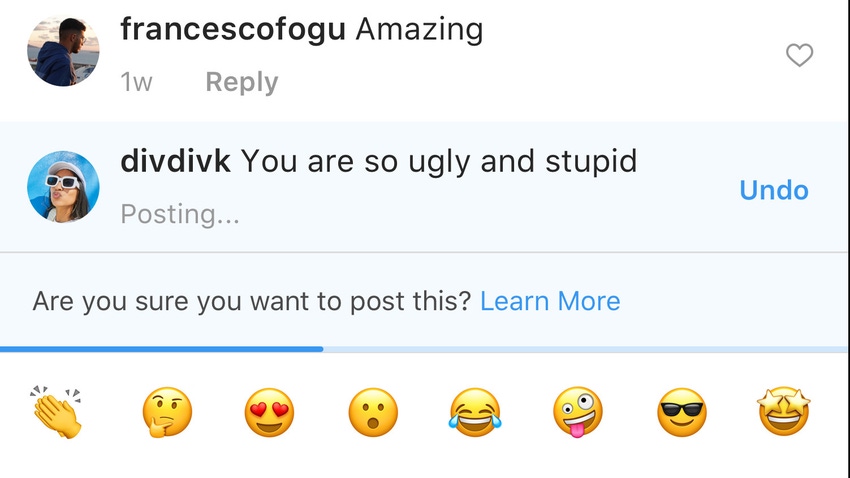

The first is designed to ‘encourage positive interactions’ but, for once, this is less Orwellian than it sounds. Instagram is using artificial intelligence to identify comments that may be considered ‘offensive’ as they are being written. It then displays a notification to that effect, inviting the commenter to think twice before publishing.

The second offers to ‘protect your account from unwanted interactions’ and even has a name: Restrict. Once you ‘restrict’ another user, any comments they make on your Instagram posts become invisible to everyone except them. Restrict also prevents them from seeing when you’ve read their direct messages or when you’re active on the platform.

Instagram seems to be trying to minimize harm without falling into the trap of the kind of draconian blanket bans that have reflected badly on its parent company. It’s also sensitive to the fact that, in the case of bullying, victims are often reluctant to report their abusers for fear of exacerbating the problem. So offering a tool that restricts contact with another person without them knowing makes sense, especially since it doesn’t offer the power of outright censorship.

The same could be said for the offensiveness warning. A user is apparently free to ignore it, but perhaps simply being made to pause before posting will stop much of the spite and vitriol that social media seems to encourage from making its way into the public domain.

Striking the right balance between freedom and safety is a core dilemma facing all social media platforms. To date they’ve largely done a bad job, tending to overreact to specific events rather than take the lead through their own policies and technology. This Instagram initiative seems to be an attempt to change that, and so it should be applauded.

The potential downsides, as ever, concern vague definitions and mission creep. Whether a comment is offensive is intrinsically subjective, so it’s at the very least unsettling to see machines making that call. Also, while these warnings can be ignored now, who’s to say enforcement of this offense filter won’t become more heavy-handed in future, once everyone has accepted it?

Finally, there are distinct surveillance and data privacy implications. Does Instagram keep track of how often a user posts something its AI has deemed offensive, and how often that user ignores its advice to tone it down? Will there eventually be more severe consequences for such behaviour? The problem with imposing any restrictions, however benign at first, is that they can become the thin end of the wedge. If Instagram starts punishing its users because its HAL-like AI disapproves of them, this move could backfire significantly.
