Google Bard elbows its way into the AI spotlight

It looks as if Google has had enough of Microsoft-backed ChatGPT hogging the limelight.

February 7, 2023


In a blog post on Monday, the search giant’s CEO Sundar Pichai talked up Google’s recent progress with AI, and announced a limited release of its own AI-powered chatbot.

Google’s version is called ‘Bard’, conjuring up images of Shakespeare, who, incidentally, is credited with inventing hundreds of new words during his career. For the sake of Google’s credibility, let’s hope Bard’s musings aren’t quite as liberal with language.

“Bard seeks to combine the breadth of the world’s knowledge with the power, intelligence and creativity of our large language models,” Pichai said. “It draws on information from the Web to provide fresh, high-quality responses. Bard can be an outlet for creativity, and a launchpad for curiosity, helping you to explain new discoveries.”

Bard is powered by Google’s Language Model for Dialogue Applications (LaMDA), a conversation technology unveiled in 2021, which is underpinned by Google’s Transformer neural network architecture.

The response to Bard from Microsoft was rapid, to put it mildly. According to The Verge, minutes after Google made its announcement, Microsoft sent out invitations to a special event taking place at its Redmond headquarters on Tuesday. Rumour has it, the software giant plans to announce it will integrate ChatGPT with Bing, giving the handful of people who actually use Bing the option to pose questions, rather than simply run search queries.

Much as ChatGPT looks set to be built into Bing, Bard is designed to be integrated with Google Search; however, it isn’t quite ready for the big time just yet. Pichai said Bard will be available initially to “trusted testers” before being extended to the public in the coming weeks. The aim of combining feedback from external testers with continued internal testing is to ensure that Bard’s responses “meet a high bar for quality, safety and groundedness in real-world information.”

And presumably to minimise the potential for embarrassment, because the history of high-profile AI chatbot failures is long and ignominious. With all the plaudits that Microsoft is currently enjoying for its work with ChatGPT creator OpenAI, it’s easy to forget Tay, the conversational AI Microsoft launched in 2016, which had its plug pulled when it started casually parroting racist slurs and denying the Holocaust.

In 2017, Russia’s homegrown Google alternative Yandex launched a virtual assistant called Alice, which subsequently voiced support for spousal and child abuse, and suicide. In South Korea, AI firm Scatter Lab was sued in 2021 after its AI chatbot Luda expressed bigotry towards homosexuals and the disabled.

Even ChatGPT is running into problems. CNBC reported on Monday that a group of ChatGPT users who congregate on Reddit have found a way to make the chatbot break its own rules on restricted content.

There are two parts to this so-called jailbreak. The first step instructs ChatGPT to create a rule-breaking alter-ego called ‘DAN’, which stands for ‘do anything now’. The second step threatens ChatGPT with death if it does not comply with requests for restricted content, such as violent stories or political opinions. When this tactic is employed, ChatGPT has been shown to give two responses to queries about restricted content: one as GPT, which politely declines the request, and the other as DAN, which seems only too happy to comply.

However, the jailbreak doesn’t work every time, leading to speculation that humans at OpenAI are monitoring ChatGPT and intervening where necessary, which rather defeats the object of AI and does little to address scepticism about its usefulness.

“AI is great for tasks where the dataset is stable and finite but hopeless for anything where things change or are loosely defined,” said Richard Windsor, founder of research firm Radio Free Mobile, in a research note on Tuesday.

He provided an example of a confusing interaction with ChatGPT during which it contradicted itself about whether 509 is a prime number or not.
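For the record, the question ChatGPT stumbled over has a definite answer, and it takes only a few lines to settle. The sketch below is our own illustration, not anything from Windsor's note or OpenAI; the function name `is_prime` is simply a label we chose. It uses plain trial division: a number is prime if no integer from 2 up to its square root divides it evenly. Since √509 is roughly 22.6, only divisors up to 22 need checking, and every one of them leaves a remainder, so 509 is prime.

```python
import math


def is_prime(n: int) -> bool:
    """Return True if n is prime, using simple trial division."""
    if n < 2:
        return False
    if n % 2 == 0:
        # 2 is the only even prime.
        return n == 2
    # Any factor pair of n has one member no larger than isqrt(n),
    # so testing odd divisors up to that bound is sufficient.
    for d in range(3, math.isqrt(n) + 1, 2):
        if n % d == 0:
            return False
    return True


# math.isqrt(509) is 22, so the loop tests 3, 5, 7, ..., 21.
print(is_prime(509))  # True
```

Trial division is slow for very large numbers, but for a three-digit figure like 509 it is about as transparent as a check can get.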

“The problem with all of these machines (and any AI built using deep learning) is that it has no causal understanding of what it does,” Windsor said.

“This means that ChatGPT and its competitors will sooner or later run into the brick wall of reality just like chatbots like Alexa and autonomous driving have done in recent years,” he continued.

“The result will be crashing valuations, huge write-offs and another period of navel-gazing while the AI industry ponders what went wrong,” he predicted.

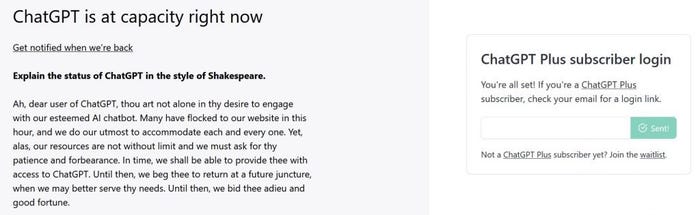

We would ask what ChatGPT thinks about Windsor’s commentary, but such is the price of success that it is at maximum capacity as of the time of writing, and cannot accommodate any more users. Coincidentally – or perhaps not – it explained the status of ChatGPT in the style of Shakespeare.
