ChatGPT and other AI apps are going to create new winners and losers in the job market

Advanced AI services may be coming for content creators’ jobs, but those creators will also be free to busy themselves with art or other ‘more human pursuits’, says Beerud Sheth, CEO of conversational AI firm Gupshup.

January 16, 2023

AI chatbots have been around for a while in varying states of usefulness, as anyone who has used or tolerated them in lieu of a human customer service rep will know. But when OpenAI’s ChatGPT burst onto the scene recently, it created a buzz in much wider circles than the tech press, with all sorts of mainstream outlets noticing that conversational algorithms have suddenly got a lot more sophisticated.

Whenever AI is discussed in a broad way, it doesn’t take long for people to ask if their jobs are about to be dissolved in this brave new world of shrewd software. ChatGPT, and services like Jasper, seem able to create at the very least passable passages of text that could be applied to all sorts of content creation or marketing services, even if the text does need a polish before publishing. As well as truck drivers and customer service agents, it looks like AI is coming for the laptop class too.

Whether or not this particular app, or some future jumped-up algorithm with a similar MO, is going to single-handedly render all of us honest scribes redundant remains to be seen – but the technology is bound to continue getting better. And there will be societal impacts on economies like the UK’s if it turns out firms can meet all their content, marketing, sales, comms and customer service needs adequately through a software subscription as opposed to obsolete flesh-and-blood employees – who tend to have all sorts of tiresome needs like salaries, insurance, half-yearly reviews, pensions, and water.

On the other hand, there may be unforeseen benefits to unleashing mega-smart natural language based software on society – rarely do technologies come along with all pros and no cons, or vice versa.

To help us wade through some of these big issues, we spoke to Beerud Sheth, co-founder and CEO of Gupshup, a ‘conversational AI unicorn’ which apparently powers over 7 billion messages per month for thousands of large and small businesses.

What is the nature of the breakthrough that has led ChatGPT to seem more ‘intelligent’?

It’s the combination of multiple factors that have come together at this point in time. One is computing power – Moore’s Law continues to make its march across the tech industry, servers are getting stronger and stronger. And then there was a key breakthrough in deep learning about 10 years ago now, on how to create these more advanced neural networks. The neural net idea has been around for decades, but creating multiple levels, and therefore deep across the layers – that deep learning idea was very powerful.

And then really it’s just combining those two things, which took a little bit of time. These neural networks were built on really, really large data sets, which only became possible in the last year or so. But what we found was when you get beyond a certain number of parameters, as they measure the size of the network, once it becomes large enough, and once it consumes large enough data sets – it basically reads the whole internet practically, or at least a large fraction thereof – we discovered that it’s good enough as perceived by human tests. It really passes the Turing test.

So the current phase of AI, what’s called LLMs (Large Language Models), literally just came together over the last six months, and it’s really blown away human perception of AI capability. That’s really a big inflection point in the capability of AI.

You mentioned that some of it has passed the Turing test…

I am using it a little loosely, so not a technical definition, but loosely speaking the Turing test is whether an AI software can fool the human into thinking that it’s a human-like response, that it’s a human on the other side providing that response. And the point is when you now ask these large language models to, for example, write a blog post, or to act like a teacher and answer some questions, or to act like a customer support person and answer some questions, the answers are amazingly, surprisingly good.

It feels like there is a human sitting on the other side writing those things and that’s validated by the fact that you now have a whole bunch of companies – Jasper is one which does copywriting and a whole bunch of copywriters are using it, and it’s doing $100 million in revenue. It really is just a thin layer on having ChatGPT write advertising copy, blog posts, and so on.

So that’s the point I was trying to make: you finally have, on a mass scale, enough people saying that in their respective contexts GPT-3, for example, can create high-quality, human-like advertising copy in that case, and many other verticals are also being developed for that. In short, what I’m trying to say is it has just reached a level of capability that is pretty convincing for most humans across many industries.

Who is this good for?

I’m your stereotypical Silicon Valley entrepreneur, so I’m going to say in general it’s good for society. I’m an optimist at heart. It may not be uniformly good for every member of society, but in general, I think it takes the knowledge base or the capability of humans to a whole new level. Obviously it’s great in terms of being able to write copy and being able to automate a whole lot of language tasks, whether it’s summarising large articles, whether it’s writing simplistic articles around sports or financial events, whether it’s writing sales emails, marketing emails, almost anything to do with human language. And that’s a very vast class of activities.

That’s the Large Language Models, but then there are other variations of the models that are also getting really good at dealing with images and videos. There is this broad category called generative AI – it can generate text, it can generate videos, it can generate images, and so on. So now you can create artwork, you can create maybe movies and it becomes a lot simpler, a lot faster, a lot easier.

I think it’s gradually going to pervade almost every aspect of human activity. When that happens, the actual social impact is clearly a double-edged sword. On the one hand, the positive thing is it amplifies human capability, which means each human can do more. The flip side of that is maybe we’ll need fewer humans doing that. Or we’ll just need the humans that are building these models more than the humans that actually do the work that the models can now do. Almost any technology historically has impacted jobs: some jobs sort of go away, but it creates new jobs.

I think the hard part from our current perspective is it’s easy to see some of the jobs that will get impacted and will go away. It’s a little harder to see the jobs that will come on the other side of this thing. But training AI models is going to become a new job that didn’t exist until yesterday. Some of the grunt work that caused a lot of human frustration will go away, which is probably a good thing. But some jobs that people like to do might also go away.

There’s no doubt it’ll impact… I don’t think anyone can claim to know exactly all the impacts it will have, but I think in general, there are two things I think will happen. One, it helps to have faith that technology moves society forward – it makes us more efficient, helps us do more things. But perhaps on the political side there may be huge inequality where the people building the models, for example, might accrue a lot of the economic value versus a whole bunch of people who are doing it and that might create a political problem around redistribution. There’s certainly no question that there’ll be some issues we’ll have to address going forward as well.

Wherever AI is being applied usually the question is asked, ‘what does that mean for the people who are manually doing these jobs?’ When ChatGPT hit the headlines, lots of people were immediately saying what about content writers and marketing copywriters? Are those jobs going to go? Their fears aren’t for nothing by the sounds of it…

Yeah… here’s what’s happening – if you just take some of these copywriting services companies, their revenues are increasing, and increasing dramatically, which means there’s plenty of humans paying them to use these services to incorporate it into what they do. So on the one hand, certain copywriters are getting impacted, but on the other from what I’ve heard, there are lots of other non-native English speaking copywriters, who now suddenly can be as good as anyone else in the world.

Because they can write something and then have the language model make sure it’s in perfect English – it flows nicely, it’s perfect and so on. So I think yeah it is going to create new winners and losers. Just like the car, some of the buggy riders went away, but then we had drivers. But AI is a little different, because AI is much more general purpose, it’s not one aspect of human activity. It’s basically human intelligence – it’s replicating human intelligence across a very broad set of fields. Like I said, the aggregate value to society… there’s no question.

As things become more automated, they normally become cheaper. They become more affordable, more of us as consumers will be able to afford more things. Think about Moore’s law – it’s a very technical term, but fundamentally computers for the last 40 years or more have been getting better and cheaper at the same time. There are very few industries where things happen that way. We’ve been doing that on the computing side, and now human activity will get better and cheaper at the same time, which means more of us will be able to afford more – it could be entertainment, personalised services, personalised tutoring, personalised shopping, and so on. As consumers, it’ll be massively valuable. But as producers, it begs the question who’s doing the producing? And if it is AI, what does it do to jobs? One could imagine one scenario is that a lot of the AIs can do a lot of the work, then most people can not worry about it and pursue maybe art or other more human pursuits, if you will.

So long as, like I said, the political issue is addressed. Do we have Universal Basic Income? Do we have other means? And so on. It’s not very different from Scandinavia or the Middle East where they find the oil wealth which produces enough value for society and so long as governments can spread the goods across a broader base of society, ultimately everybody is better off. So I think there are some real challenges, but I am optimistic that human society will be able to take advantage of it and address it.

Universal Basic Income is often mentioned in conversations around AI and what it does to jobs, though some of the creative things like art that people might do in lieu of having a job are now actually being produced by AI as well. What’s the limit to what AI can do? What will you always need a human for, or what is AI not going to be able to replace humans at doing?

There are many books written on this subject, but I think certainly there’s a whole class of activity around empathy and caring. So whether it’s nursing or childcare or eldercare or even human entertainment and so on, I think a lot of it really just boils down to having this unique empathy and none of the AI indicates that ability. They could simulate empathy and caring in certain situations, but there are situations where it just won’t be able to deal with that.

There’s another set of areas – extrapolating, strategy, creativity. Not of the kind where ‘okay, you’ve seen 100 cats, maybe can you draw yet another cat’. Of course AI will be able to do it, but creative business strategies, marketing strategies, in a way it ties to empathy. Being able to create something new that other humans would love, in a way that it wasn’t imagined before. Maybe interpersonal activities.

So there’s a few classes of things where we expect humans and AI together will be much better than humans alone or AI alone. The issue is not that every human will get replaced. I don’t think that’s the worry at all. I think the fear is that we’re going to just need fewer humans overall. If you can automate, let’s say, medical diagnosis or imaging radiographs, it doesn’t mean every doctor will be replaced. For example, AI could do the work with a human supervisor. It’s a double-edged sword – it amplifies the capability of fewer doctors serving the needs of many more people. Which is good – for the first time you’d have billions of people actually having access to medical care, which today, except in the Western world, is not universal by any stretch.

In some cases it may be better and we may direct it in the way of bringing basic services to billions of people around the world because now you can actually deliver it at a lower cost, to be able to do it in Africa and India, at a lower price and providing services that previously were only available in the Western world. That would be a huge boon and enormous value to people. In the beginning, perhaps there’ll just be greater value and then over time the ratios will change: the number of doctors per patient, the number of teachers per student and so on will adjust over time. There’s a ton of good and overall in aggregate, I’m optimistic that it’s more good than not. There are some sort of negative use cases as well that we have to be careful about – an unrestricted AI, just like unrestricted weaponry, can cause all kinds of problems. So you need to keep it in safe hands, with good values and such. So there’s a lot of work for us to do.

How do you think we do keep it in safe hands? One potential danger that occurred to me was the effect on cybersecurity, fraud and phishing. You could see how some of this could go towards conning people out of giving up their details, for example.

If you go beyond basic rules and basic techniques, it ultimately boils down to this article of faith which asks: are there more good people in the world than bad people? So long as that holds, I think we are fine. If you think about blockchain, it’s based on the simple premise that there are more good people than bad people. Because everybody is creating these blocks in the chain and technically anybody can cheat. But so long as more than half the people are good, they will always have more computers and more power than the bad ones.

Similarly, so long as more of the good people have the weapons than the bad ones, I think that acts as a deterrent that protects us. In the same way, so long as more of the good people have better AI than the bad ones. And I think the reason why that happens is a lot of these advances inherently are not possible unless you have sort of an academic environment, a collaborative environment, an open environment and then some governance practices and policies.

What gives you hope with OpenAI or some of these other Silicon Valley companies is that the people creating it are themselves the most conscious of its power and its capabilities. And there are already some governance mechanisms in place. There are a lot of conversations around it. At some point I’m sure the governments will have to get involved as well so that it stays in the right hands.

But I think at least at this point, to harness the kind of computing power and the creativity and the ideas and the academic innovation… it’s all still mostly in (quote unquote) good hands, and I think we ought to keep it that way.

And then specifically there are good techniques – when you train AI, you make sure it has human values infused into the training data sets, you remove some of the negative. There are techniques for removing human bias – when you train AI on the last 1000 years of human documents, it’s very biased. The text is very biased. So you’ve got to explicitly remove gender bias, racial bias and many other biases from that. But the higher level issue is we just need to make sure that more of the good people (quote unquote) have it than the bad.

Are there any specific applications for LLMs in telecoms?

I think it ties into the telecom sector really neatly because if you think about AI and language, fundamentally it transforms text-based and conversational interactions. And all of these conversational interactions are delivered through messaging channels, all of which run through the telecom sector. So whether it’s text messaging or WhatsApp or RCS, or Instagram, the telecom sector provides the foundational channels, the pipes on which AI can actually be delivered to every individual in any part of the world.

So there’s an infrastructure element, and there’s also a service layer element of it. Today, generally, telecom services are maybe overwhelmed by the scale of requests and interactions and so on, but now if they can automate customer support, if they can automate cross-sell and upsell, automate optimising consumer bills and things like that, they could deliver a quality of service and have a much greater personalised capability when it comes to handling personal requirements, personal needs, support queries, troubleshooting, roaming, all kinds of things. And in many ways, it could be a lot better. Like almost any industry, telcos will also have to figure out how they see this disruption… it creates opportunities and threats.

And I think you have to lean into the opportunities, and maybe mitigate the threats a little bit. It changes a lot of things, it changes consumer expectations, it changes what people expect and what they want to do and can do, and they have to keep pace with all of it. So there’s a lot of work for telco executives.

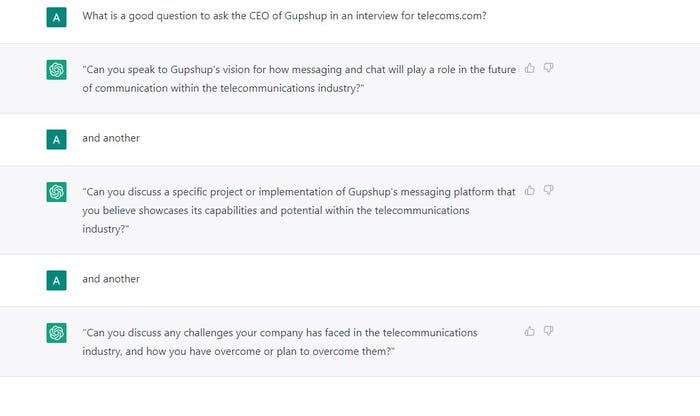

To ascertain just how redundant hacks are in the new wave of conversational AI, in preparation for this interview we asked ChatGPT what questions we should ask Beerud:

We found them a little softball for our tastes, so perhaps we’ve got a few years of usefulness left in us. That is, until they roll out the JEREMY PAXMAN 1000 update.
