Microsoft lags hyperscaler competitors on AI inference services

New research from Omdia identifies technological advantages Google and AWS currently have over Microsoft when it comes to delivering AI services to cloud customers.

May 9, 2024

Inference refers to getting AI models to actually do useful things, as opposed to the process of training them. For the past year or two, most of the headlines have been generated by the progress of models such as GPT and Gemini. Billions have been spent on ultra-powerful processors, often made by Nvidia, that can churn through zillions of pieces of information and use them to ‘teach’ the model.

But the ultimate purpose of all this is for those models to then be able to perform all kinds of clever, automated tasks for which we have hitherto relied on expensive, inefficient human beings. The process of extracting this value from AI models is referred to as ‘inference’, in which artificial intelligence responds to human requests as dictated by its training.

Omdia notes that hyperscalers ‘are likely to be the first point of contact for those establishing an AI model inference infrastructure to serve users.’ So, apart from their relationship with specific models, the matter of how well they’re able to serve up cloud-based inference services to third parties is of great significance to the booming AI market. It’s that area that is explored in Omdia’s AI Inference Products & Strategies of the Hyperscale Cloud Providers report.

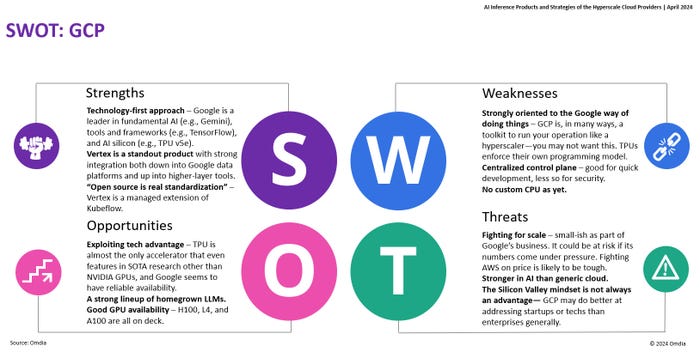

“The competition in this sector is intense,” said Omdia’s Alexander Harrowell. “Google has an edge related to its strength in fundamental AI research, while AWS excels in operational efficiency, but both players have impressive custom silicon.

“Microsoft took a very different path by concentrating on FPGAs initially but is now pivoting urgently to custom chips. However, both Google and Microsoft are considerably behind AWS in CPU inference. AWS’ Graviton 3 and 4 chips were clearly designed to offer a strong AI inference option and staying on a CPU-focused approach is advantageous for simplifying projects.”

Harrowell was kind enough to give us a copy of the full report which, as his quote suggests, takes an especially deep dive into the chip side of things. As you can see from the slides below, while Microsoft arguably controls the company behind the best-known model, its CPU strategy seems to lag behind those of its competitors, which also have advantages in pricing and broader AI technology. In other words, it’s playing catch-up in this market.

Perhaps cognisant of this, Microsoft has just announced a $3.3 billion investment designed to turn an area of Wisconsin, roughly between Milwaukee and Chicago, into an AI hub. “We will use the power of AI to help advance the next generation of manufacturing companies, skills and jobs in Wisconsin and across the country,” said Brad Smith, Vice Chair and President of Microsoft. “This is what a big company can do to build a strong foundation for every medium, small and start-up company and non-profit everywhere.”

As the quote indicates, there is a strong political element to this move, which we would assume has been encouraged by a generous dollop of public money. Apparently even President Biden is going to attend the formal announcement. AI is clearly the dominant technological trend of the foreseeable future, and the sector is still in its relative infancy. Microsoft has lots of money and can be expected to spend ever-larger proportions of it on the task of catching up to its main competitors.
