Cloud Native for Telco: Making IT Technology Feasible at the Network Edge

February 18, 2020

By Paul Miller, Jr. - Vice President, Telecom Market Segment - Wind River

This article is sponsored by Wind River

What Is Cloud Native?

The most widely accepted definition of cloud native comes from the Cloud Native Computing Foundation (CNCF), which defines the term as follows:

Cloud native computing uses an open source software stack to deploy applications as microservices, packaging each part into its own container, and dynamically orchestrating those containers to optimize resource utilization.

Cloud native is sometimes misconstrued as synonymous with containers. While containers are essential to cloud native environments, they are not the only component. Cloud native technologies can include containers, service meshes, microservices, immutable infrastructure, and declarative APIs. Containers offer a fast, lightweight, and disposable method for deploying and updating workloads and devices at the edge, and they can be used independently of a public or private cloud.

Today, containers are predominantly deployed by the telco/IT sector. Over the next five years, other industries are also expected to increase their reliance on containers, including financial services, retail, healthcare, government, and education.

Edge Use Cases Need Cloud Native Environments

For telco, the top use cases for containers and, more generally, cloud native techniques are:

Virtualized radio access network (vRAN): Creates more flexible and efficient mobile networks by centralizing virtualized signal processing functions and distributing radio units at cell sites

Multi-access edge computing (MEC): Distributes compute resources into access networks to improve service experience and support new latency-sensitive applications

Virtual customer premises equipment (vCPE): Virtualized platform delivering managed services to enterprises at lower cost and higher flexibility than hardware appliances

Some communications service providers (CSPs) have already started to deploy these use cases in the cloud, mostly via VMs, and are looking to migrate to containers. Unsurprisingly, many primary use cases for containers are also foundational to 5G, which will generally require greater reliance on software-based networks and cloud native services.

But these 5G use cases are not limited to telco. Cloud native network transformations give CSPs the opportunity to expand into vertical markets and serve a wider variety of new customers through cloud native edge deployments.

In transportation, for example, there are compelling 5G use cases for smart transit and rail systems, autonomous shipping, and autonomous vehicles. Within Industry 4.0, companies are exploring 5G-enabled robotics applications, human-machine interfaces (HMIs), and virtual programmable logic controllers (vPLCs). The healthcare sector is also actively looking into 5G for imaging, monitoring, and diagnostics.

These use cases share some commonalities: they all need local compute and processing resources at the edge of the network, they must support high-bandwidth and/or low-latency applications, and they require workload consolidation. They are also critical infrastructure workloads, which means they have additional requirements for performance, security, and availability.

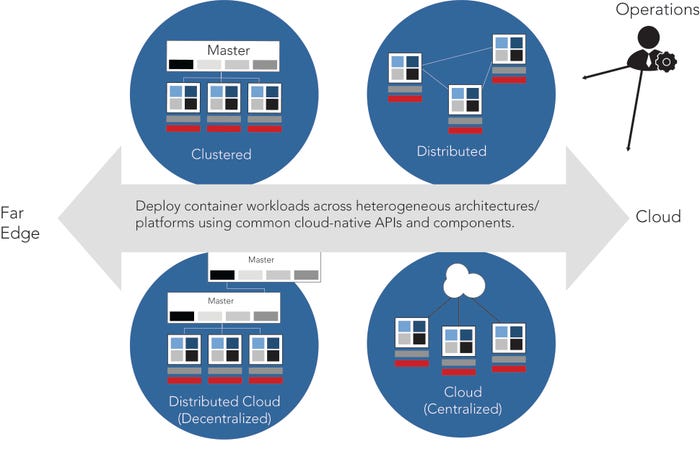

Fig 1: Heterogeneous edge environment

Navigating Heterogeneous Environments at the Edge

There are different network edge locations within what could be called the "broad" edge: from the near edge in telco networks, where base stations are deployed, to the far edge on the premises of factories, enterprises, or stadiums. As the edge evolves, the definition may expand to include the devices themselves.

Across the broad edge, different cloud architectures will be deployed, depending on the use case and the legacy infrastructure. For a campus or enterprise, the architecture could be a decentralized, distributed cloud where each site is autonomous. Other edge use cases may require a distributed hierarchical architecture or a centralized cloud where control functions are centrally located.

The result is a heterogeneous architecture environment at the edge, which is significantly different from the more homogeneous environment of a centralized data center.

Cloud native technology helps CSPs navigate these heterogeneous environments by giving them “landing zone” autonomy at the edge. That is, regardless of the architecture or edge location, a cloud native environment allows CSPs to deploy application container workloads across heterogeneous architectures and platforms using common cloud native APIs and components.
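To make the "landing zone" idea concrete, here is a minimal sketch, assuming a standard Kubernetes cluster at each site and a container image published for multiple CPU architectures. One Deployment manifest is reused everywhere, and only the site-specific node selector changes. The site names, the image reference `registry.example.com/edge-app:1.0`, and the helper `render_for_site` are hypothetical; the manifest fields and the `kubernetes.io/arch` node label are standard Kubernetes.

```python
import copy

# Hypothetical edge sites mapped to the CPU architecture of their worker nodes.
SITES = {
    "factory-floor": "arm64",
    "cell-site-17": "amd64",
    "regional-edge": "amd64",
}

# One common Deployment definition (standard apps/v1 fields), shared by all sites.
BASE_DEPLOYMENT = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "edge-app"},
    "spec": {
        "replicas": 1,
        "selector": {"matchLabels": {"app": "edge-app"}},
        "template": {
            "metadata": {"labels": {"app": "edge-app"}},
            "spec": {
                "containers": [
                    # Hypothetical image, assumed to be built for both amd64 and arm64.
                    {"name": "edge-app", "image": "registry.example.com/edge-app:1.0"}
                ]
            },
        },
    },
}


def render_for_site(arch: str) -> dict:
    """Return a copy of the common manifest pinned to nodes of the given architecture."""
    manifest = copy.deepcopy(BASE_DEPLOYMENT)  # never mutate the shared template
    manifest["spec"]["template"]["spec"]["nodeSelector"] = {"kubernetes.io/arch": arch}
    return manifest


# Render one manifest per site; everything except the node selector is identical.
manifests = {site: render_for_site(arch) for site, arch in SITES.items()}
```

Keeping a single template and varying only a small set of per-site values is one way to keep heterogeneous sites manageable through the same API; real deployments would typically do this with a templating or GitOps tool rather than hand-written code.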

Benefits of Cloud Native Environments

Containers and cloud native environments offer key benefits in the deployment, operations, management, and development of applications. Altogether, cloud native fosters a DevOps approach to delivering applications and services.

More specifically, cloud native benefits include additional security and isolation from the host environment, easy version control in software configuration management, a consistent environment from development to production, and a smaller footprint. Cloud native lets developers use their preferred toolsets and enables rapid spin-up and spin-down as well as easy image updates. It also provides portability across all major distributions while eliminating environmental inconsistencies.

Depending on who is involved in application deployment, cloud native presents different advantages, as follows:

Infrastructure provider: With containers, applications can be abstracted away from the environment, so that people involved in infrastructure provisioning need not be concerned with the specific workloads.

Application manager: Cloud native allows quick, easy, and consistent application deployments, regardless of the target architecture, and replaces complex upgrades with ephemeral updates. Day 2 operations managers can easily handle the deployment, monitoring, and lifecycle of the workloads.

Developers: Containers facilitate DevOps by easing the development, testing, deployment, and overall management of applications. Developers can design and test once and then deploy everywhere. Containers are also lighter weight than VMs: because they do not boot an entire operating system (OS), they start up much faster and use much less memory.

Challenges of Edge Cloud Deployments

As CSPs consider edge deployment strategies, there are four main challenges to overcome:

Complexity: With varied use cases and heterogeneous environments, edge cloud deployments are inevitably complex, especially in operations and management. Add to this a fast-changing cloud native landscape, where new open source projects are launched almost weekly.

Diversity: There is no one-size-fits-all approach for edge cloud deployments. There are major differences between operational technology (OT) and information technology (IT) environments, each with different latency, bandwidth, and scale requirements.

Cost: With potentially thousands of edge servers deployed, hardware costs can be more than five times the cost of deploying the same application in a data center. In some cases, a worker node might require four servers to host the control plane, storage, and compute, and these servers will need extra fans, disks, and cooling.

Security and performance: Since many edge sites don’t have the physical security that a large data center would, security needs to be built into the application and infrastructure. And the edge clouds must meet telco and other critical infrastructure performance requirements.

Fig 2: Distributed edge cloud topology

Before addressing these challenges, it’s important to establish what an edge cloud topology looks like. At the edge, there is likely to be a multitier, or n-tier, architecture with a distributed control plane, where provisioning, lifecycle management, logging, and security are performed. There are also edge servers and worker nodes. The edge servers are like mini data centers with lightweight control planes that manage the worker nodes, which could be located at the base station in a mobile access network or on premises at an enterprise. The worker nodes run the application workloads, which can be anything from telco apps to OT-based workloads such as factory automation or transit system management.

Where a workload is deployed depends on the application's latency requirements: the lower the required latency, the farther out toward the edge the worker node must be deployed. The farther out a node is, the more important a lightweight control plane and a small physical footprint become.
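The placement rule above can be sketched as a simple search over tiers. This is a minimal illustration, assuming a fixed list of tiers ordered from most central to farthest out, each with a single round-trip latency figure; the tier names and millisecond values are hypothetical, not measurements. The sketch picks the most central tier that still meets the latency budget, on the assumption that central tiers offer more resources at lower cost per workload.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    rtt_ms: float  # assumed round-trip latency from end devices to this tier


# Ordered from most central to farthest out. Latency values are illustrative only.
TIERS = [
    Tier("central-datacenter", 50.0),
    Tier("regional-edge", 20.0),
    Tier("near-edge-base-station", 5.0),
    Tier("far-edge-on-premises", 1.0),
]


def place_workload(max_latency_ms: float) -> str:
    """Return the most central tier whose latency fits within the budget."""
    for tier in TIERS:
        if tier.rtt_ms <= max_latency_ms:
            return tier.name
    raise ValueError(f"no tier can meet a {max_latency_ms} ms latency budget")
```

For example, with these assumed figures a workload with a 10 ms budget lands at `near-edge-base-station`, while one with a 2 ms budget must go all the way to `far-edge-on-premises`. A production orchestrator would also weigh compute, storage, and cost, not latency alone.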

Given the topology of distributed edge clouds, several requirements must be met to deploy edge clouds cost-efficiently:

Workload orchestration: CSPs need a clear determination of where and how an application workload should be processed across an n-tier gradient of compute, storage, and network resources provided by the edge cloud.

Zero touch provisioning: An automated system is necessary for managing workload orchestration that will take into account the performance, time, and cost requirements of an application.

Centralized management: CSPs need a system-wide view of all the servers and devices in the edge network from a single pane of glass. A centralized control plane can easily provide services such as logging, storage, security, updates, and upgrades as well as lifecycle management.

Edge cloud autonomy: If connectivity is lost between the central site and an edge cloud site, the edge cloud needs enough control plane functionality to carry on performing mission-critical operations.

Massive scale: The solution needs to be able to scale from tens to hundreds of thousands of edge clouds in geographically dispersed locations.
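Edge cloud autonomy, in particular, comes down to the edge site keeping enough state to act on its own. The sketch below is a hypothetical illustration, not how any specific platform implements it: an edge controller caches the last desired state it received from the central site and, when the central site is unreachable, keeps reconciling against that cached copy. The class `EdgeControlPlane`, the workload names, and the `ConnectionError`-based outage signal are all assumptions made for the example.

```python
from typing import Callable, Dict, Optional


class EdgeControlPlane:
    """Hypothetical edge-site controller illustrating edge cloud autonomy."""

    def __init__(self, fetch_desired: Callable[[], Dict[str, int]]):
        # Callable that asks the central site for the desired state;
        # assumed to raise ConnectionError when the WAN link is down.
        self._fetch_desired = fetch_desired
        self._cached_desired: Optional[Dict[str, int]] = None
        self.running: Dict[str, int] = {}  # workload name -> replica count

    def reconcile(self) -> bool:
        """Converge local workloads on the desired state.

        Returns True if the desired state came from the central site,
        False if the cached copy was used because the link was down.
        """
        try:
            desired = self._fetch_desired()
            self._cached_desired = dict(desired)  # remember for future outages
            online = True
        except ConnectionError:
            if self._cached_desired is None:
                raise  # no successful sync yet, so nothing to fall back on
            desired = self._cached_desired
            online = False
        # Stand-in for starting/stopping containers on local worker nodes.
        self.running = dict(desired)
        return online


# Usage: simulate a WAN outage between the central site and the edge.
central_up = True


def fetch_from_central() -> Dict[str, int]:
    if not central_up:
        raise ConnectionError("central site unreachable")
    return {"vran-du": 2, "vplc": 1}  # hypothetical workloads and replica counts


edge = EdgeControlPlane(fetch_from_central)
first = edge.reconcile()   # synced with the central site
central_up = False
second = edge.reconcile()  # ran from the cached desired state
```

The design choice is that losing the central site only freezes the desired state; it does not stop the edge from running and restarting its mission-critical workloads.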

How to Migrate to Cloud Native

Substantial investment in hypervisors and VMs is already in place, but CSPs don’t have to abandon what they’ve already built to start deploying cloud native network functions (CNFs). There are many ways to leverage existing investments, including these migration paths:

Host containers in virtual machines (VMs): By putting containers in VMs, in a kind of hybrid model, CSPs can take advantage of container features while leveraging the benefits of their existing hypervisor and VM architecture, such as live migration, VM scaling, and load balancing. Even though they run inside VMs, the containers can be managed individually by container orchestration engines such as Kubernetes.

The downsides are that this approach creates a complex environment that can be too heavyweight for an edge cloud deployment, and some aspects of performance may suffer.

Bare metal containers in a VM architecture: In contrast to the hybrid model, this architecture is more like a dual model. It provides a bare metal environment within OpenStack; containers and VMs are separated and managed equally. Containers run natively on the bare metal, without a hypervisor, and are managed by container orchestration engines, which improves performance. This is a flexible method for deploying containers and VMs that allows CSPs to maintain legacy VM investments. But it still has the heavyweight control plane of the OpenStack environment, which is not ideal for edge cloud deployments.

Containers first with VM support when needed: This architecture flips previous models around by containerizing OpenStack services and running them in a container on top of a bare metal Kubernetes cluster. VMs and containers are managed equally and in the same way through Kubernetes. OpenStack can be run but only when needed to support legacy VMs. This solution is more lightweight with a thinner control plane, offers better performance, and enables CSPs to deploy OpenStack containers only when they have VMs that require OpenStack. This architecture is implemented by the StarlingX open source project.
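The "OpenStack only when needed" rule of the containers-first model can be expressed as a small decision function. This is a hypothetical sketch of the logic described above, not StarlingX's actual configuration mechanism: every site gets the bare metal Kubernetes platform, and the containerized OpenStack services are added only if at least one workload at that site is a legacy VM. The names `Workload`, `platform_services`, and `openstack-containerized` are made up for illustration.

```python
from dataclasses import dataclass
from typing import List

VALID_KINDS = {"container", "vm"}


@dataclass(frozen=True)
class Workload:
    name: str
    kind: str  # "container" or "vm"


def platform_services(workloads: List[Workload]) -> List[str]:
    """Return the platform services a site needs under a containers-first model."""
    for w in workloads:
        if w.kind not in VALID_KINDS:
            raise ValueError(f"unknown workload kind {w.kind!r} for {w.name}")
    services = ["kubernetes"]  # always deployed: the bare metal container platform
    if any(w.kind == "vm" for w in workloads):
        # Hypothetical name for OpenStack services running as containers on Kubernetes.
        services.append("openstack-containerized")
    return services
```

A site running only CNFs ends up with the thinner control plane, while a site that still hosts a legacy VM-based function pulls in the extra OpenStack services.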

How to Build Your Own Cloud Native Edge Cloud with StarlingX

There are many open source projects dedicated to easing the deployment of edge clouds, such as those hosted by the CNCF, the Linux Foundation’s Akraino and EdgeX Foundry projects, and the OpenStack Foundation’s Airship and StarlingX projects.

StarlingX is one of the newest projects and stands out because it provides a pre-built distribution for edge clouds that removes much of the complexity of building a cloud native platform. It’s a container-based architecture that supports VMs when needed. It addresses some of the diversity and scalability issues of distributed edge cloud deployments with flexibility designed for edge and OT use cases. It can scale from a small-footprint single server to a complete mini data center, and it can be architected in a distributed or a centralized model.

StarlingX addresses the key requirements for distributed edge clouds, namely zero touch provisioning, workload orchestration, centralized management, edge cloud autonomy, and massive scalability, and in doing so overcomes current limitations for OT and edge use cases.

Fig 3: Wind River Cloud Platform

Wind River® is focused on accelerating the innovation and disruption at the network edge through this important initiative. As a commercial deployment of StarlingX, Wind River Cloud Platform will be key to enabling new business opportunities and innovative applications across multiple markets.

Conclusion

Cloud native can help future-proof telco networks. As service providers are formulating strategies for delivering edge, OT, and 5G use cases, they should consider deploying a cloud native container strategy to support distributed edge clouds. Deployments at the edge of the network are far more complex than implementations in large data centers, and there are many different ways to arrive at a cloud native solution while also protecting legacy investments. But with the right distributed edge cloud platform, service providers can cost-effectively deliver a wealth of compelling, revenue-generating services.

The article was written by Paul Miller, Jr. As Vice President of the Telecom Market Segment at Wind River, Paul Miller is responsible for the company’s strategic vision and market support for telecommunications TEMs and CSPs. With more than 25 years of telecommunications and advanced applications technology leadership at both large companies and successful startups, he is currently focused on Wind River’s NFV and SDN enabled products for the carrier market.
