It turns out regulating the data economy is really hard

New data regulations may well define the economy and society over the next few decades, and they are still far from perfect. But before you get too critical, you have to realise just how complicated this thankless job can actually be.

August 20, 2018

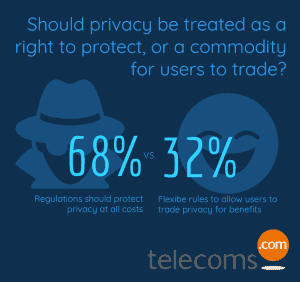

An interesting question to ask is whether privacy should be a right protected by regulation at all costs, or whether rule makers should allow users to trade their privacy for benefits. This trade is the foundation of some information businesses. Facebook is a good example, as targeted advertising pays for the delivery of free services. On a simpler level, free TV channels such as ITV have been doing this for years, without the hyper-targeted platform.

If data is the oil of the 21st century, rule makers need to figure out how personal information can be used without infringing on privacy.

“You have to remember it is a very tricky balance to strike,” said Jocelyn Paulley, Director at law firm Gowling WLG. “On the one hand, regulators do want to take a flexible approach which allows for innovation, but they do also have the responsibility to create rules which protect the right to privacy; it is a human right, after all.”

As Paulley points out, it’s a little more complicated than simply writing down rules and punishments. The issues arise when you try to predict the future. Technologists and innovators are heading down so many different paths, so how can you possibly write rules covering every possible outcome? Regulators certainly don’t have the manpower to undertake such a task, and they most likely don’t have the competence either.

Today’s approach is about building flexibility into the rules, while also listening to the community about which developments are likely to emerge in the future. While this leaves grey areas, Paulley highlights that there is little choice at the moment. The breadth of developments in the technology world means laws are written in almost theoretical terms before being applied to specific use cases. Interpretation does create complications, but the last thing regulators want to be (despite doing an excellent impression at times) is a speed bump to progress. Sometimes the grey areas just have to be accepted as the lesser of two evils.

“Part of the complications in the UK are that we are a common law society, not a constitutional one,” said Paulley. “At European level, GDPR has been written to allow for future developments and for member states to localise some rules.”

Will this allow for privacy to be treated as a commodity? Perhaps, but more needs to be done to educate the consumer.

This is perhaps the touchiest aspect of data privacy. Regulators might well be open to the idea of users trading their privacy for benefits, but rules are there to make sure consumers are not exploited because of their ignorance. Understanding the data economy is incredibly difficult, and the complexity of terms and conditions makes it thornier still. Technology companies muddy the waters intentionally, so regulators cannot offer too much flexibility; otherwise, the protections for the consumer disappear.

If you were to ask consumers today whether they would trade privacy for free services, they would probably say no, until you start laying out the bill: £10 for Facebook, £2 a month for Google, no free Spotify, 50p for the Evening Standard each commute, £2.50 for every game you download on your smartphone, £50 a year for email and cloud storage, 1p a message and 10p a minute for calls on WhatsApp. It starts to add up, and suddenly trading privacy becomes an attractive option. The problem is that consumers lack an understanding of the mechanics of the digital economy.

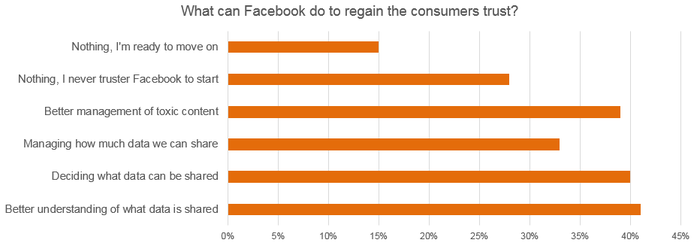

Part of the European Union’s General Data Protection Regulation (GDPR) is geared towards creating greater transparency. In the Cambridge Analytica scandal, the consequences for Facebook were so severe partly because of a lack of transparency. Data science has advanced at a remarkable rate over the last few years, especially in targeted advertising platforms, but Facebook hadn’t taken users on the journey with it by explaining how their personal information was being used. When the curtain was pulled back, the sight of CEO Mark Zuckerberg manically pulling levers on the big data machine while frantically shouting “Senator, we run ads” shocked the general public.

By forcing technology companies to collect opt-in consent from consumers, regulators are also ensuring consumers receive an education in what opting in actually means. Companies now have to state explicitly what personal data will be used for. Paulley highlighted the hope that, by becoming more transparent, these companies will earn more of the consumer’s trust, thereby fuelling the data economy. However, there is a risk that once users understand how the machine works, they will opt out of the system. This is perhaps one of the reasons companies have not been so forthcoming about the dark arts of data science: the fear of a negative reaction.

This fear has now become a reality at Facebook. By concealing the dark arts of data science, it broke users’ trust. Facebook had advanced its data science so far without taking consumers on the same journey that the reality of that progress was scary. Campaigns such as #DeleteFacebook on Twitter emerged, making the consequences of a privacy failure very real. Perhaps this was a watershed moment that will encourage companies to embrace the rules and promote transparency themselves rather than resist. When you are caught out (and it always happens eventually), the consequences are just as bad, if not worse.

GDPR has some promising areas, but there are aspects, such as criminal record screening in recruitment, which need more clarity in the future. Flexibility is required to promote innovation, but rigidity is needed to safeguard the consumer. Regulators haven’t got it right so far, but that isn’t entirely unexpected. Writing rules for the data economy is uncharted territory, and it’s very complicated.